This Chambazone.com post collects answers to common questions about weighted MSE loss.


What is weighted MSE?

Weighting MSE is a way to give more importance to some prediction errors than to others in the overall score. This is useful if you are using MSE as a performance metric for your model, especially during the model training (loss function) or validation (hyper-parameter setting).
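Here is a minimal Python sketch of a weighted MSE. It assumes one non-negative weight per example and divides by the sum of the weights. This is a common convention; some implementations divide by the number of examples instead. The function name `weighted_mse` and the sample data are illustrative, not taken from any particular library.

```python
def weighted_mse(y_true, y_pred, weights):
    """Weighted mean squared error: sum(w * (y - y_hat)^2) / sum(w)."""
    if not (len(y_true) == len(y_pred) == len(weights)):
        raise ValueError("inputs must have the same length")
    total_weight = sum(weights)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    # Each squared error is scaled by its weight before summing.
    weighted_sq = sum(w * (y - p) ** 2 for y, p, w in zip(y_true, y_pred, weights))
    return weighted_sq / total_weight

y_true = [1.0, 2.0, 3.0, 4.0]
y_pred = [1.5, 2.0, 2.0, 4.0]   # squared errors: 0.25, 0, 1, 0

equal = weighted_mse(y_true, y_pred, [1, 1, 1, 1])     # same as the plain MSE
emphasis = weighted_mse(y_true, y_pred, [1, 1, 3, 1])  # third example counts three times
print(equal, emphasis)
```

With equal weights the result is the ordinary MSE (0.3125). Tripling the weight of the third example, which has the largest error, raises the score to about 0.5417. Errors on that example now matter more in the overall score.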

How is MSE loss calculated?

Mean squared error (MSE) loss is calculated in four steps:

- Take the difference between each true value `y` and the corresponding prediction.
- Square each difference.
- Add all of the squared differences together.
- Divide that sum by the number of data points n.

The result is the MSE.
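The steps above can be sketched in plain Python as follows. `mse` is a hypothetical helper name, and the numbers are made-up sample data.

```python
def mse(y_true, y_pred):
    """Mean squared error: the average of (y - y_hat)^2 over all n examples."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and the same length")
    # Difference between truth and prediction, then square it.
    squared_errors = [(y - p) ** 2 for y, p in zip(y_true, y_pred)]
    # Add the squared errors together, then divide by the number of points.
    return sum(squared_errors) / len(squared_errors)

print(mse([3.0, -0.5, 2.0, 7.0], [2.5, 0.0, 2.0, 8.0]))  # 0.375
```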


What does MSE loss mean?

Mean squared error (MSE) is the most commonly used loss function for regression. The loss is the mean, over the observed data, of the squared differences between the true values $y_i$ and the predicted values $\hat{y}_i$. As a formula: $\mathrm{MSE} = \frac{1}{n}\sum_{i=1}^{n}(y_i - \hat{y}_i)^2$.

How do I use MSE loss function?

The Mean Squared Error (MSE) is perhaps the simplest and most common loss function, often taught in introductory Machine Learning courses. To calculate the MSE, you take the difference between your model’s predictions and the ground truth, square it, and average it out across the whole dataset.

What is weighted RMSE?

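Weighted RMSE is the square root of the weighted MSE. In R it can be written as:

```r
# Weighted root mean squared error; the weights do not need to sum to 1
weighted.rmse <- function(actual, predicted, weight) {
  sqrt(sum((predicted - actual)^2 * weight) / sum(weight))
}
```

A simpler formula that skips this division is only valid when the weights already sum to 1. Dividing by `sum(weight)` generalizes it to any (non-normalized) set of weights.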
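To check the normalization argument, here is a sketch of the same computation ported to Python. The name `weighted_rmse` and the data are illustrative assumptions. Scaling all weights by a constant does not change the result, so raw weights and weights normalized to sum to 1 give the same value.

```python
import math

def weighted_rmse(actual, predicted, weight):
    """Python port of the R weighted.rmse function: sqrt(sum(w * err^2) / sum(w))."""
    num = sum(w * (p - a) ** 2 for a, p, w in zip(actual, predicted, weight))
    return math.sqrt(num / sum(weight))

actual = [2.0, 4.0, 6.0]
predicted = [3.0, 4.0, 3.0]   # squared errors: 1, 0, 9

raw = weighted_rmse(actual, predicted, [1.0, 2.0, 1.0])            # weights sum to 4
normalized = weighted_rmse(actual, predicted, [0.25, 0.5, 0.25])   # weights sum to 1
print(raw, normalized)  # both equal sqrt(2.5)
```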

What is a good MSE score?

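There is no universally correct value for MSE, because its size depends on the scale of the target variable. Simply put, the lower the value the better, and 0 means the model's predictions match the data exactly.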

How do you calculate MSE in Excel?

- Step 1: Enter the actual values and forecasted values in two separate columns. …

- Step 2: Calculate the squared error for each row. Recall that the squared error is calculated as (actual − forecast)². …

- Step 3: Calculate the mean squared error by averaging the squared errors from Step 2.


Is MSE the same as variance?

The variance measures how far a set of numbers is spread out, whereas the MSE measures the average of the squares of the "errors", that is, the differences between the estimator and what is estimated. The MSE of an estimator $\hat{\theta}$ of an unknown parameter $\theta$ is defined as $E[(\hat{\theta}-\theta)^2]$.
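The two quantities are linked by the standard bias-variance decomposition, $E[(\hat{\theta}-\theta)^2] = \mathrm{Var}(\hat{\theta}) + (E[\hat{\theta}]-\theta)^2$. The MSE therefore equals the variance only for an unbiased estimator. The sketch below checks this on a small set of made-up estimates, using sample averages in place of expectations. The identity holds exactly when the variance uses a 1/n denominator, as `statistics.pvariance` does.

```python
import statistics

def mse_decomposition(estimates, theta):
    """Return (mse, variance, bias_squared) for estimates of a known parameter theta."""
    mean_est = statistics.fmean(estimates)
    mse = statistics.fmean([(e - theta) ** 2 for e in estimates])
    variance = statistics.pvariance(estimates)  # spread around the estimator's own mean
    bias_sq = (mean_est - theta) ** 2           # squared distance of that mean from theta
    return mse, variance, bias_sq

# Hypothetical estimates of theta = 5.0 from repeated experiments
mse, var, bias_sq = mse_decomposition([4.0, 6.0, 7.0, 7.0], theta=5.0)
print(mse, var + bias_sq)  # both equal 2.5
```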

Why do we use MSE for regression?

The mean squared error (MSE) tells you how close a regression line is to a set of points. It takes the distances from the points to the regression line (these distances are the "errors"), squares them, and averages the squares. Squaring removes negative signs, so errors above and below the line cannot cancel out. It also penalizes large errors more heavily than small ones.

Why is MSE bad for classification?

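There are two reasons why Mean Squared Error (MSE) is a bad choice for binary classification problems. First, minimizing MSE amounts to assuming that the targets were generated with Gaussian (bell-shaped) noise around the prediction. In Bayesian terms this is a Gaussian likelihood, which does not match binary labels. Second, when MSE is combined with a sigmoid output, the loss is non-convex in the model parameters, which can make optimization harder than with cross-entropy.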

When should we use MSE?

The Mean Squared Error is a common default metric for evaluating regression models, whether in R, Python or MATLAB. Its main drawback is that it is expressed in the squared units of the target, so its magnitude is not on the same scale as the data. The Root Mean Squared Error (RMSE), the square root of the MSE, fixes this by returning to the original units.

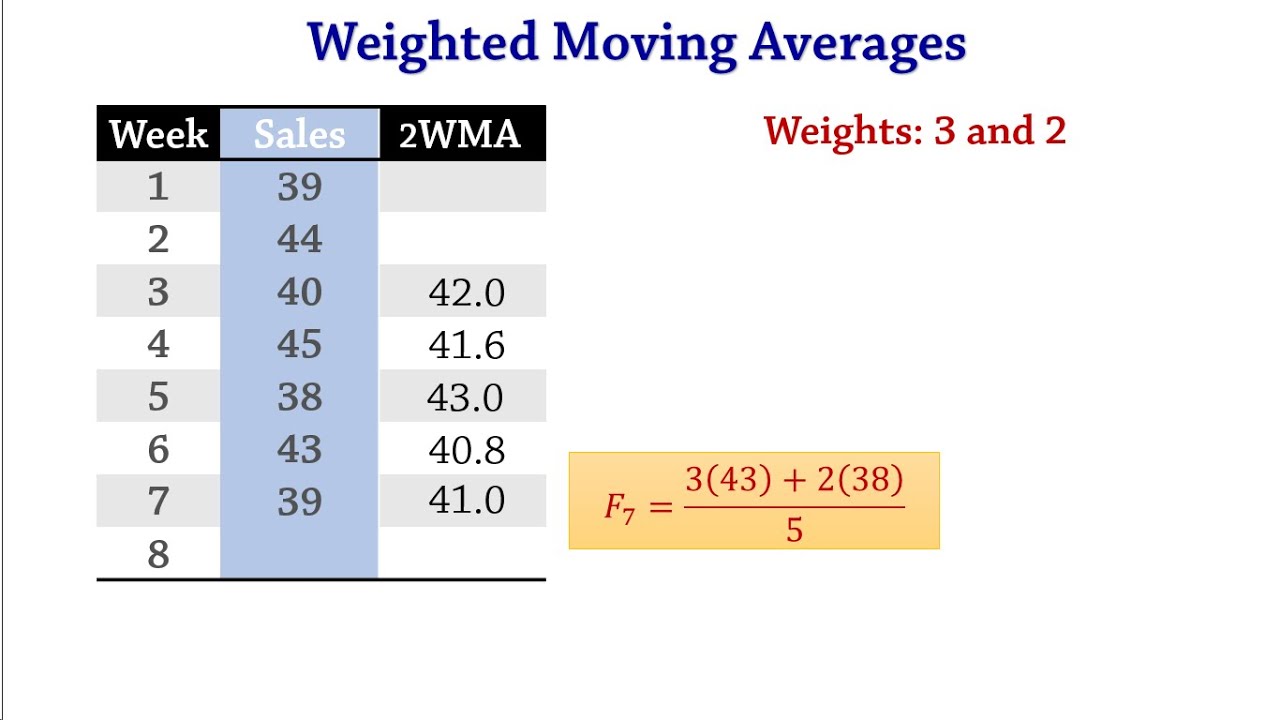


Why is cross-entropy loss better than MSE?

Cross-entropy loss, or log loss, measures the performance of a classification model whose output is a probability value between 0 and 1. It is preferred for classification, while mean squared error (MSE) is one of the best choices for regression. The right choice follows from the type of problem being solved.
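The following example illustrates why cross-entropy is usually preferred for classification. For a single example with true label 1, the squared error of a predicted probability can never exceed 1. The log loss, by contrast, grows without bound as a confidently wrong prediction approaches 0. The helper names are illustrative, and the clipping constant `eps` is an assumption added to avoid `log(0)`.

```python
import math

def squared_error(y, p):
    """Squared error between a 0/1 label and a predicted probability."""
    return (y - p) ** 2

def log_loss(y, p, eps=1e-15):
    """Binary cross-entropy for one example; p is the predicted probability of class 1."""
    p = min(max(p, eps), 1 - eps)  # clip so that log(0) is never evaluated
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))

# True label is 1; compare a mildly wrong and a confidently wrong prediction.
for p in (0.4, 0.01):
    print(p, round(squared_error(1, p), 4), round(log_loss(1, p), 4))
```

For the mildly wrong prediction (p = 0.4), the squared error is 0.36 and the log loss is about 0.92. For the confidently wrong one (p = 0.01), the squared error only rises to 0.9801, while the log loss jumps to about 4.61.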

Why do we use MSE instead of MAE?

MSE is differentiable everywhere, which makes gradient-based optimization straightforward. MAE is not differentiable at zero error. Therefore, in many models, MSE or RMSE is used as the default loss function, even though RMSE is harder to interpret than MAE.

How is Huber loss calculated?

…

We do this as follows:

- Calculate `error`, which is `y_true - y_pred`.

- If `tf.abs(error) < 1`, then `is_small_error` is `True`. …

- Define `squared_loss` as `tf.square(error) / 2`.

- Define `linear_loss` as `tf.abs(error) - 0.5`.

- Use tf. …

- Finally, return the Huber loss for each prediction.
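The steps above use TensorFlow. The following framework-free Python sketch performs the same per-example computation, with a plain conditional expression standing in for the elided `tf.` call. The `threshold` parameter is an assumption that generalizes the fixed value 1. With threshold δ, the linear branch becomes δ·(|error| − δ/2), which reduces to `|error| - 0.5` when δ = 1. In Keras, the built-in `tf.keras.losses.Huber` exposes this threshold as its `delta` argument.

```python
def huber_loss(y_true, y_pred, threshold=1.0):
    """Per-example Huber loss; threshold=1.0 matches the steps above."""
    losses = []
    for t, p in zip(y_true, y_pred):
        error = t - p
        is_small_error = abs(error) < threshold
        squared_loss = error ** 2 / 2                              # quadratic near zero
        linear_loss = threshold * (abs(error) - threshold / 2)     # linear for large errors
        losses.append(squared_loss if is_small_error else linear_loss)
    return losses

print(huber_loss([0.0, 0.0, 0.0], [0.5, 1.0, 3.0]))  # [0.125, 0.5, 2.5]
```

Both branches give 0.5 at |error| = 1, so the loss is continuous where it switches from quadratic to linear.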

Why is MSE sensitive to outliers?

MSE, and therefore RMSE, is more sensitive to the examples with the largest errors. Each error is squared before averaging (and, for RMSE, before the square root is taken), so a single example with a very large error can skew the score. MAE, which averages absolute errors, is less sensitive to outliers.
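The following numeric illustration uses made-up errors. Adding one large error to an otherwise uniform set moves RMSE much more than MAE. The helpers `mae` and `rmse` are illustrative.

```python
import math

def mae(errors):
    """Mean absolute error of a list of residuals."""
    return sum(abs(e) for e in errors) / len(errors)

def rmse(errors):
    """Root mean squared error of a list of residuals."""
    return math.sqrt(sum(e ** 2 for e in errors) / len(errors))

clean = [1.0, -1.0, 1.0, -1.0, 1.0]
with_outlier = [1.0, -1.0, 1.0, -1.0, 10.0]  # one large error

print(mae(clean), rmse(clean))                # 1.0 1.0
print(mae(with_outlier), rmse(with_outlier))  # 2.8 and roughly 4.56
```

MAE rises from 1.0 to 2.8, while RMSE rises from 1.0 to about 4.56.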

What is weighted variance?

The weighted variance is found by taking the weighted sum of the squared deviations from the weighted mean and dividing it by the sum of the weights.
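Here is a minimal Python sketch of that definition, assuming non-negative weights with a positive sum. This is the population-style form; sample-style corrections differ depending on whether the weights are frequencies or reliabilities. The function name and data are illustrative.

```python
def weighted_variance(values, weights):
    """Population-style weighted variance: sum(w * (x - mean_w)^2) / sum(w)."""
    total = sum(weights)
    # Weighted mean: each value contributes in proportion to its weight.
    mean_w = sum(w * x for x, w in zip(values, weights)) / total
    # Weighted sum of squared deviations from that mean, divided by the total weight.
    return sum(w * (x - mean_w) ** 2 for x, w in zip(values, weights)) / total

print(weighted_variance([1.0, 2.0, 4.0], [1.0, 1.0, 2.0]))  # 1.6875
```

With equal weights this reduces to the ordinary population variance.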

Which one is the best metrics out of MSE and MAE Why explain?

MSE weights large errors heavily, so it is dominated by the largest errors. RMSE, which is in the same units as the target, better reflects performance when large errors are especially undesirable. MAE is never larger than RMSE, and the gap widens when a few errors are much larger than the rest.

Is a lower MAE better?

Both the MAE and RMSE can range from 0 to ∞. They are negatively-oriented scores: Lower values are better.

What is acceptable RMSE?

One rule of thumb holds that RMSE values between 0.2 and 0.5 show that the model predicts the data relatively accurately. This only makes sense when the target is on a comparable (for example, normalized) scale, because RMSE is expressed in the units of the target. An adjusted R-squared above 0.75 is often considered a very good value, and in some cases an adjusted R-squared of 0.4 or more is acceptable as well.

What is MSE in Excel regression?

The Mean Squared Error (MSE) is an estimate that measures the average squared difference between the estimated values and the actual values of a data distribution. In regression analysis, the MSE calculates the average squared differences between the points and the regression line.


How is SSE and MSE calculated?

Sum of squared errors (SSE) is actually the weighted sum of squared errors if the heteroscedastic errors option is not equal to constant variance. The mean squared error (MSE) is the SSE divided by the degrees of freedom for the errors for the constrained model, which is n-2(k+1).

What does MSE mean in forecasting?

In statistics, the Mean Squared Error (MSE) is defined as the mean, or average, of the squared differences between actual and estimated values.
