
Validation loss is a metric used to assess how a deep learning model performs on the validation set, a portion of the dataset set aside to check the model on examples it was not trained on. The training loss indicates how well the model is fitting the training data, while the validation loss indicates how well it fits new data. Loss is computed by summing (or averaging) the errors made on each example in the training or validation set, and its value shows how well or poorly the model behaves after each iteration of optimization. An accuracy metric, by contrast, measures the model’s performance in a more interpretable way.

What is training and validation loss?

The training loss indicates how well the model is fitting the training data, while the validation loss indicates how well the model fits new data.

What is validation Loss and Validation accuracy?

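Loss is the sum of the errors made on each example in the training or validation set (frameworks usually report the average instead, so the value does not depend on the size of the set). The loss value shows how poorly or well a model behaves after each iteration of optimization. Accuracy, the fraction of examples predicted correctly, measures the model’s performance in a more interpretable way.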
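
The difference between the two metrics can be sketched in a few lines of Python. This is only an illustration: the labels and predicted probabilities are made up, binary cross-entropy is assumed as the loss, and the loss is averaged rather than summed so it does not grow with the number of examples.

```python
import math

def log_loss(y_true, p_pred):
    """Mean binary cross-entropy over a list of examples."""
    total = 0.0
    for y, p in zip(y_true, p_pred):
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(y_true)

def accuracy(y_true, p_pred, threshold=0.5):
    """Fraction of examples whose thresholded prediction matches the label."""
    correct = sum(int(p >= threshold) == y for y, p in zip(y_true, p_pred))
    return correct / len(y_true)

labels = [1, 0, 1, 0]
confident = [0.95, 0.05, 0.90, 0.10]  # every prediction correct and confident
hesitant = [0.60, 0.40, 0.55, 0.45]   # every prediction correct, but only just

print(accuracy(labels, confident), log_loss(labels, confident))
print(accuracy(labels, hesitant), log_loss(labels, hesitant))
```

Both sets of predictions reach the same accuracy, yet the hesitant ones have a clearly higher loss. This is why the two numbers can move differently during training.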

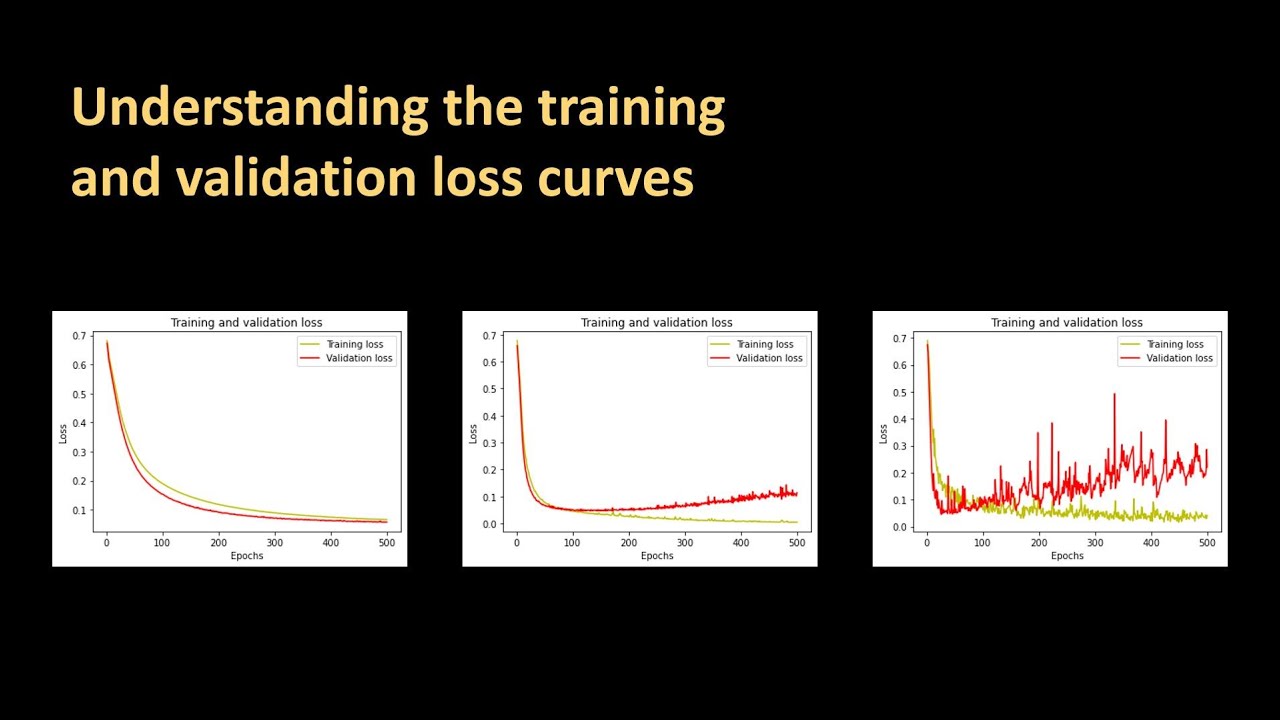

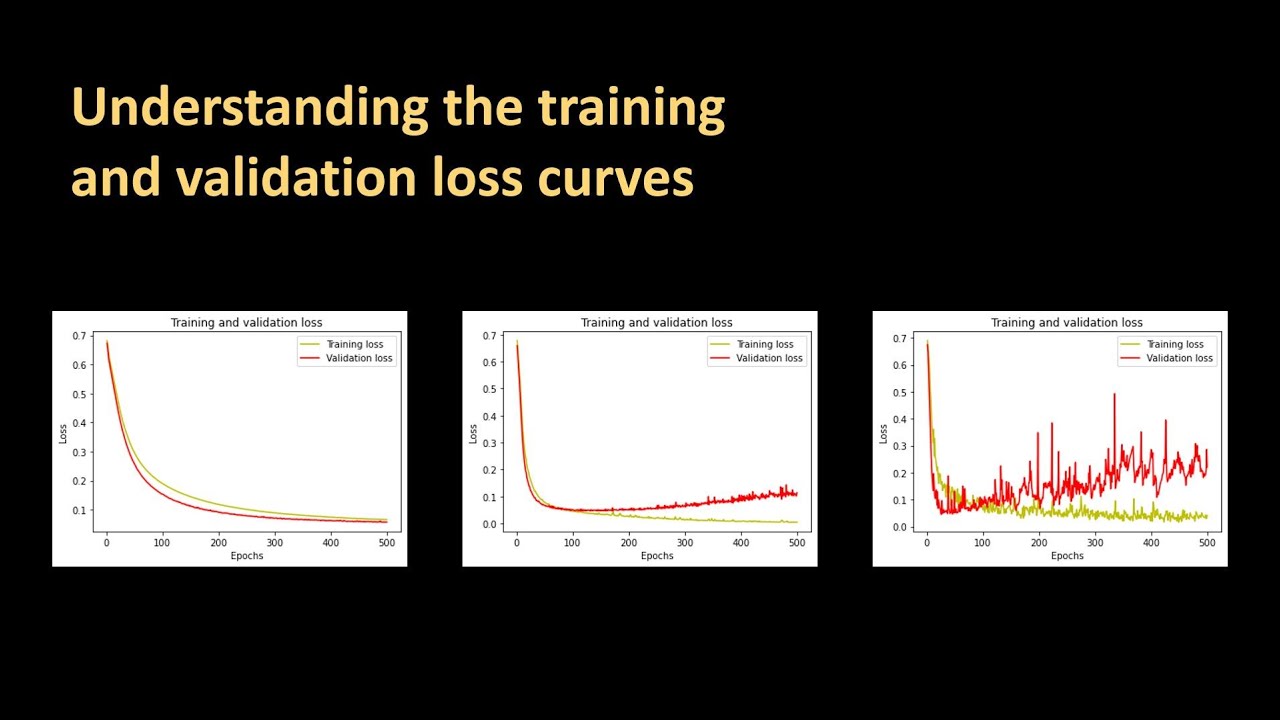

154 – Understanding the training and validation loss curves

Images related to the topic154 – Understanding the training and validation loss curves

What does lower validation loss mean?

If your training loss is much lower than your validation loss, the network might be overfitting. Possible remedies are to decrease the network size or to increase dropout; for example, you could try a dropout rate of 0.5. If your training and validation losses are about equal and both are still high, the model is underfitting; if they are about equal and both are low, the model is fitting well.

Is lower validation loss better?

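In general, yes: a lower validation loss means the model does better on data it was not trained on. How the validation loss compares with the training loss is a separate question. Just because your model learns from the training set doesn’t mean its measured performance will be better on it, and data scientists sometimes come across cases where the validation loss is lower than the training loss. Common causes are regularization such as dropout, which is active only during training, and the fact that the training loss is often averaged over an epoch while the validation loss is computed at the end of it.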

What is validation loss for?

Validation loss is a metric used to assess the performance of a deep learning model on the validation set. The validation set is a portion of the dataset set aside to validate the performance of the model.

Can validation loss be greater than 1?

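Yes. Loss is not a probability or a percentage, and many loss functions, such as cross-entropy or mean squared error, have no upper bound, so a value greater than 1 is not an error in itself. Typically the validation loss is greater than the training loss, because the loss function is minimized on the training data. Early stopping, which halts training once the validation loss stops improving, is a common way to prevent overfitting. Results on the training data are usually, though not always, better than results on the validation data.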
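
As a rough sketch of the early-stopping idea, the function below scans a list of per-epoch validation losses and reports where training would stop. The function name, the `patience` parameter and the loss values are all assumptions made for illustration; real frameworks ship their own early-stopping utilities with their own options.

```python
def early_stopping_epoch(val_losses, patience=2):
    """Return the index of the epoch at which training would stop.

    Training stops once the validation loss has failed to improve on its
    best value for `patience` consecutive epochs.
    """
    best = float("inf")
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best:
            best = loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch
    return len(val_losses) - 1  # never triggered: ran all epochs

# Made-up validation losses: improving at first, then rising.
history = [0.90, 0.70, 0.60, 0.62, 0.65, 0.70]
stop = early_stopping_epoch(history, patience=2)
best_epoch = min(range(stop + 1), key=lambda i: history[i])
print(stop, best_epoch)
```

In practice one would keep the weights from the best epoch rather than from the epoch where training stopped.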

What is ML loss?

Loss is the penalty for a bad prediction. That is, loss is a number indicating how bad the model’s prediction was on a single example. If the model’s prediction is perfect, the loss is zero; otherwise, the loss is greater.


What is epoch loss?

Epoch: In terms of artificial neural networks, an epoch refers to one cycle through the full training dataset. Usually, training a neural network takes more than a few epochs. Loss: A scalar value that we attempt to minimize during our training of the model.
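
To make the two terms concrete, here is a minimal sketch that trains a one-variable linear model with plain stochastic gradient descent and records the training and validation loss after every epoch. The toy data, the learning rate and the number of epochs are assumptions chosen only so the example converges quickly.

```python
def mse(w, b, data):
    """Mean squared error of the line y = w*x + b on (x, y) pairs."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)

# Toy data on the line y = 2x + 1, split into training and validation parts.
train = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]
val = [(0.5, 2.0), (2.5, 6.0)]

w, b, lr = 0.0, 0.0, 0.05
train_losses, val_losses = [], []

for epoch in range(100):
    # One epoch = one pass over every training example.
    for x, y in train:
        error = w * x + b - y
        w -= lr * 2 * error * x  # gradient of the squared error w.r.t. w
        b -= lr * 2 * error      # gradient of the squared error w.r.t. b
    train_losses.append(mse(w, b, train))
    val_losses.append(mse(w, b, val))  # validation data never updates w or b

print(train_losses[0], train_losses[-1], val_losses[-1])
```

Each entry in the two lists is one scalar per epoch, which is exactly what a training and validation loss curve plots.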

What is CNN loss layer?

Loss is the prediction error of the neural network, and the method used to calculate it is called the loss function. In a CNN, the loss layer is the final layer, which compares the network’s output with the target and computes this value. The loss is used to calculate the gradients, and the gradients are used to update the weights of the network. This is how a neural network is trained.

How do I fix overfitting?

- Reduce the network’s capacity by removing layers or reducing the number of elements in the hidden layers.

- Apply regularization , which comes down to adding a cost to the loss function for large weights.

- Use Dropout layers, which will randomly remove certain features by setting them to zero.
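
The regularization item above, adding a cost for large weights, can be sketched as follows. An L2 penalty is assumed here; the function names, the coefficient `lam` and the weight values are illustrative only.

```python
def l2_penalty(weights, lam):
    """L2 penalty: lam times the sum of squared weights."""
    return lam * sum(w * w for w in weights)

def regularized_loss(data_loss, weights, lam=0.01):
    """Total loss = loss on the data + cost for large weights."""
    return data_loss + l2_penalty(weights, lam)

small = [0.1, -0.2, 0.3]
large = [3.0, -4.0, 5.0]

# Same data loss, but the model with large weights pays a bigger penalty.
print(regularized_loss(0.4, small))
print(regularized_loss(0.4, large))
```

Because the optimizer minimizes the total, it is pushed toward smaller weights unless larger ones reduce the data loss enough to pay for themselves.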


Why is my validation loss fluctuating?

If your validation loss varies wildly, a likely cause is that the validation set is too small or not representative of the whole dataset. I would recommend shuffling or resampling the validation set, or using a larger validation fraction.
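
One simple way to follow that advice is to shuffle the examples before splitting them, so the validation part is not just the tail of an ordered file. The helper below is a hypothetical sketch (the name, the default fraction and the seed are assumptions), not a replacement for the split utilities that most libraries already provide.

```python
import random

def shuffled_split(examples, val_fraction=0.2, seed=0):
    """Shuffle the examples, then split them into (train, validation) lists."""
    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    shuffled = list(examples)
    rng.shuffle(shuffled)
    n_val = max(1, int(len(shuffled) * val_fraction))
    return shuffled[n_val:], shuffled[:n_val]

train_part, val_part = shuffled_split(range(10), val_fraction=0.2)
print(len(train_part), len(val_part))
```

Raising `val_fraction` gives a larger validation set, which tends to make the validation loss less noisy at the cost of fewer training examples.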

How do you increase validation accuracy?

One of the easiest ways to increase validation accuracy is to add more data. This is especially useful if you don’t have many training instances. If you’re working on image recognition models, you may consider increasing the diversity of your available dataset by employing data augmentation.

How do I reduce validation error?

- Data Preprocessing: Standardizing and Normalizing the data.

- Model complexity: Check whether the model is too complex. Add dropout, or reduce the number of layers or the number of neurons in each layer.

- Learning Rate and Decay Rate: Reduce the learning rate; a good starting value is often between 0.0005 and 0.001, though the best value depends on the model and optimizer.

What is a dropout layer?

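The Dropout layer randomly sets input units to 0 with a frequency given by its rate parameter at each step during training time, which helps prevent overfitting. Inputs not set to 0 are scaled up by 1/(1 – rate) so that the expected sum over all inputs is unchanged. Dropout is applied only during training; at inference time the layer passes its inputs through unchanged.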
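
The behaviour described above can be sketched in plain Python. This is a simplified stand-in for a framework’s dropout layer, working on a flat list of activations; the function signature is an assumption made for the example.

```python
import random

def dropout(inputs, rate, training=True, rng=random):
    """Inverted dropout on a list of activations (rate must be below 1)."""
    if not training or rate == 0.0:
        return list(inputs)  # dropout is disabled at inference time
    keep_scale = 1.0 / (1.0 - rate)
    # Each unit is zeroed with probability `rate`; survivors are scaled up.
    return [0.0 if rng.random() < rate else x * keep_scale for x in inputs]

rng = random.Random(0)
activations = [1.0] * 10_000
dropped = dropout(activations, rate=0.5, rng=rng)

# The average stays close to the original 1.0 despite half the units being zeroed.
print(sum(dropped) / len(dropped))
```

The scaling keeps the expected size of the activations the same in training and inference, so no correction is needed when dropout is switched off.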

What is accuracy and validation accuracy?

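Accuracy is the fraction of examples the model classifies correctly. Training accuracy is measured on the data the model was trained on, while validation accuracy measures how well the model classifies the examples, such as images, in the validation dataset. (A validation dataset is a sample of data held back from training your model that is used to give an estimate of model skill while training the model.)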

What is the use of regularization?

Regularization refers to techniques that constrain a machine learning model during training, typically by adding a penalty term to the loss function, in order to prevent overfitting. Using regularization, we can obtain a model that fits the training data appropriately and generalizes better to unseen data, and hence reduce its errors on a test set.

Why is validation loss smaller than training loss?

One common reason for the validation loss being lower than the training loss is the data distribution itself. Consider how your validation set was acquired: Can you guarantee that the validation set was sampled from the same distribution as the training set? If the validation set happens to contain easier examples, its loss can be lower.

What is entropy loss?

Entropy loss usually refers to the cross-entropy loss function, also called logarithmic loss, log loss or logistic loss. Each predicted class probability is compared to the actual desired output for that class, 0 or 1, and a loss is calculated that penalizes the probability based on how far it is from the expected value. The penalty is logarithmic, so confident wrong predictions are penalized heavily.
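
For a single example with a one-hot target, the calculation can be sketched like this. The three-class probabilities are made up, and every predicted probability is assumed to be greater than zero so the logarithm is defined.

```python
import math

def cross_entropy(one_hot, probs):
    """Cross-entropy for one example: -sum over classes of y * log(p)."""
    return -sum(y * math.log(p) for y, p in zip(one_hot, probs))

target = [1, 0, 0]  # the true class is class 0

good = cross_entropy(target, [0.90, 0.05, 0.05])  # confident and right
bad = cross_entropy(target, [0.10, 0.60, 0.30])   # confident and wrong

print(good, bad)
```

Only the probability assigned to the true class contributes, and the loss grows without bound as that probability approaches zero.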


What is regression loss?

A loss function measures how well a given machine learning model fits the specific data set. It boils down all the different under- and overestimations of the model to a single number, known as the prediction error.
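
Two widely used regression losses, mean squared error and mean absolute error, show how under- and overestimations collapse into one number. The values below are invented for illustration.

```python
def mean_squared_error(y_true, y_pred):
    """Average of squared differences; large errors dominate."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def mean_absolute_error(y_true, y_pred):
    """Average of absolute differences; every error counts linearly."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)

actual = [3.0, 5.0, 8.0]
predicted = [2.0, 5.0, 11.0]  # one underestimate, one exact, one overestimate

print(mean_squared_error(actual, predicted))
print(mean_absolute_error(actual, predicted))
```

Squaring or taking the absolute value stops positive and negative errors from cancelling each other out.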

What is train loss?

Train loss is the value of the objective function that you are minimizing. This value could be a positive or negative number, depending on the specific objective function. The training loss is calculated over the training dataset; in practice it is often reported as an average over the mini-batches seen during an epoch.
