This post answers common questions on the topic “xgboost python feature importance”, published on Chambazone.com in the Blog category.


Does XGBoost give feature importance?

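Yes. XGBoost is a gradient boosting library that provides a parallel tree boosting algorithm for machine learning tasks. It is available in many languages, including C++, Java, Python, R, Julia and Scala. In the Python package, a trained model reports feature importance in three ways: through `Booster.get_score()`, through the `feature_importances_` attribute of the scikit-learn wrappers such as `XGBClassifier` and `XGBRegressor`, and through `xgboost.plot_importance()` for plotting.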

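How is feature importance calculated in XGBoost?

In XGBoost, importance is aggregated over every split in every tree, and the `importance_type` setting chooses the statistic:

- `weight` is the number of times a feature is used to split.
- `gain` is the average loss reduction of the splits that use the feature.
- `cover` is the average coverage of those splits, meaning the sum of second-order gradients of the samples they affect.
- `total_gain` and `total_cover` are the corresponding sums.

The general description for gradient boosted trees is similar. For a single decision tree, importance is the amount by which each attribute's split points improve the performance measure, weighted by the number of observations the node is responsible for. These per-tree values are then averaged across the trees.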
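The following plain-Python sketch shows how the five importance types aggregate per-split statistics. It is not XGBoost's own code. The split records are hypothetical; in a real model these statistics come from the trained trees.

```python
from collections import defaultdict

# Hypothetical per-split records: (feature, loss reduction, cover).
splits = [
    ("f0", 12.0, 100.0),
    ("f0", 4.0, 40.0),
    ("f1", 20.0, 60.0),
]

def importance(splits, importance_type):
    count = defaultdict(int)
    total_gain = defaultdict(float)
    total_cover = defaultdict(float)
    for feature, gain, cover in splits:
        count[feature] += 1
        total_gain[feature] += gain
        total_cover[feature] += cover
    if importance_type == "weight":       # how often the feature splits
        return {f: float(count[f]) for f in count}
    if importance_type == "gain":         # average gain per split
        return {f: total_gain[f] / count[f] for f in count}
    if importance_type == "cover":        # average cover per split
        return {f: total_cover[f] / count[f] for f in count}
    if importance_type == "total_gain":
        return dict(total_gain)
    if importance_type == "total_cover":
        return dict(total_cover)
    raise ValueError(f"unknown importance_type: {importance_type}")

print(importance(splits, "weight"))  # {'f0': 2.0, 'f1': 1.0}
print(importance(splits, "gain"))    # {'f0': 8.0, 'f1': 20.0}
```

The two types rank the features differently. `f0` is used more often, but `f1` has the larger average gain, which is why plots made with different `importance_type` values can disagree.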


Does XGBoost require feature selection?

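Feature selection: XGBoost performs feature selection to a degree on its own. Trees only split on features that reduce the loss, so uninformative features tend to be used rarely. In my experience, I always do feature selection with a preliminary round of XGBoost whose parameters differ from those I use for the final model.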

What are the advantages of XGBoost?

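- It is highly flexible: it supports custom objective functions and evaluation metrics.

- It uses parallel processing: split finding within each tree is spread across CPU threads.

- It is often faster than standard gradient boosting implementations.

- It supports L1 and L2 regularization (`reg_alpha`, `reg_lambda`), which helps control overfitting.

- It handles missing data natively: each split learns a default direction for missing values.

- It has built-in cross-validation (`xgb.cv`) that reports the evaluation metric after each boosting iteration.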

How many features can XGBoost handle?

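XGBoost has no small, fixed limit on the number of features. It also accepts sparse input, so high-dimensional data is common in practice. A related question is whether column order matters. To simulate that problem, I rebuilt an XGBoost model for each possible permutation of 4 features (24 different permutations) with the same default parameters. The MSE was consistent across all of them.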

Does XGBoost require scaling?

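Decision trees and tree ensembles (such as AdaBoost and XGBoost) do not require feature scaling. Each split only compares a feature's value against a threshold, so any monotonic rescaling of a feature leaves the resulting partitions unchanged. If you do scale features, for example for a different model in the same pipeline, perform the scaling after splitting the data into training and test sets. Fit the scaler on the training set only, so no test-set information leaks into training.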

How do you calculate feature importance?

In tree models such as scikit-learn's decision trees and random forests, feature importance is calculated as the decrease in node impurity weighted by the probability of reaching that node. The node probability is the number of samples that reach the node divided by the total number of samples. The higher the value, the more important the feature.
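Here is a plain-Python sketch of that calculation (mean decrease in impurity) on a small hand-made tree. The node layout and numbers are invented for illustration, not read from a fitted model.

```python
# Hypothetical tree, for illustration only:
# node id -> (split feature or None for a leaf, n_samples, impurity, left id, right id)
tree = {
    0: (0, 100, 0.5, 1, 2),
    1: (1, 60, 0.3, 3, 4),
    2: (None, 40, 0.2, None, None),
    3: (None, 30, 0.1, None, None),
    4: (None, 30, 0.0, None, None),
}

def impurity_importance(tree, n_features):
    n_total = tree[0][1]  # samples reaching the root
    scores = [0.0] * n_features
    for feature, n, impurity, left, right in tree.values():
        if feature is None:
            continue  # leaves do not split, so they contribute nothing
        _, n_left, imp_left, _, _ = tree[left]
        _, n_right, imp_right, _, _ = tree[right]
        # Impurity decrease weighted by the probability of reaching the node (n / n_total).
        decrease = (n * impurity - n_left * imp_left - n_right * imp_right) / n_total
        scores[feature] += decrease
    total = sum(scores)
    return [s / total for s in scores]  # normalize so the scores sum to 1

print(impurity_importance(tree, 2))
```

The root split on feature 0 contributes 0.24 and the split on feature 1 contributes 0.15, so after normalization feature 0 gets 0.24 / 0.39 of the total.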

Further reading on XGBoost feature importance in Python:

Feature Importance and Feature Selection With XGBoost in …

The XGBoost library provides a built-in function to plot features ordered by their importance. … For example, below is a complete code listing …

Xgboost Feature Importance Computed in 3 Ways with Python


How to get feature importance in xgboost? – python – Stack …

In your code you can get feature importance for each feature in dict form: `bst.get_score(importance_type='gain')` >>{'ftr_col1': …

Feature Importance Using XGBoost (Python Code Included)

And here it is. In this piece, I am going to explain how to generate feature importance plots from XGBoost using tree-based importance, permutation importance …

Why does XGBoost give different results?

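Run-to-run differences usually come from randomness in training. Row and column subsampling (`subsample`, `colsample_bytree` and related parameters) depend on the random seed, so changing the seed changes the trees. Different library versions or a different `tree_method` can also change results. Fixing the seed (`random_state` in the scikit-learn wrapper) and keeping the environment the same usually makes results reproducible.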

What is feature importance gain?

“The Gain implies the relative contribution of the corresponding feature to the model, calculated by taking each feature’s contribution for each tree in the model. A higher value of this metric, compared to another feature, implies it is more important for generating a prediction.” In XGBoost’s `get_score()`, `gain` is the average gain over all splits that use the feature, while `total_gain` is the sum over those splits.

Which feature selection method is best?

Exhaustive feature selection: this is often cited as one of the best feature selection methods because it evaluates every feature subset by brute force. It tries each possible combination of features and returns the best-performing set. The trade-off is cost: with n features there are 2^n - 1 non-empty subsets, so the method is practical only when n is small.
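Here is a minimal sketch of exhaustive selection with NumPy. To keep it fast and self-contained, the scoring model is ordinary least squares evaluated on a hold-out split. That is an assumption; in practice you would plug in your own model, such as XGBoost, and cross-validation. The data are synthetic, and only features 0 and 2 carry signal.

```python
import itertools
import numpy as np

def holdout_mse(X_train, y_train, X_test, y_test, cols):
    # Stand-in model: ordinary least squares with an intercept on the chosen columns.
    A_train = np.column_stack([X_train[:, cols], np.ones(len(X_train))])
    A_test = np.column_stack([X_test[:, cols], np.ones(len(X_test))])
    coef, *_ = np.linalg.lstsq(A_train, y_train, rcond=None)
    return float(np.mean((A_test @ coef - y_test) ** 2))

def exhaustive_selection(X, y, train_fraction=0.7):
    n_train = int(len(X) * train_fraction)
    X_train, X_test = X[:n_train], X[n_train:]
    y_train, y_test = y[:n_train], y[n_train:]
    n_features = X.shape[1]
    scores = {}
    # Every non-empty subset: sizes 1..n_features, 2**n_features - 1 subsets in total.
    for size in range(1, n_features + 1):
        for subset in itertools.combinations(range(n_features), size):
            scores[subset] = holdout_mse(X_train, y_train, X_test, y_test, list(subset))
    best = min(scores, key=scores.get)
    return best, scores

rng = np.random.default_rng(13)
X = rng.normal(size=(300, 4))
y = 3.0 * X[:, 0] - 2.0 * X[:, 2] + rng.normal(scale=0.1, size=300)
best, scores = exhaustive_selection(X, y)
print(f"evaluated {len(scores)} subsets, best: {best}")
```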

Can XGBoost take categorical features in input?

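Unlike CatBoost or LightGBM, older XGBoost releases cannot handle categorical features by themselves. Like Random Forest, they accept only numerical values, so categorical data must be encoded before it is passed to XGBoost. Common encodings are label encoding, mean (target) encoding, and one-hot encoding. Recent releases (1.6 and later) also offer native categorical support, enabled with `enable_categorical=True` on pandas columns of `category` dtype.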

Does feature selection improve performance?

Three key benefits of performing feature selection on your data are:

- Reduces overfitting: less redundant data means less opportunity to make decisions based on noise.

- Can improve accuracy: less misleading data can improve modeling accuracy.

- Reduces training time: less data means that algorithms train faster.

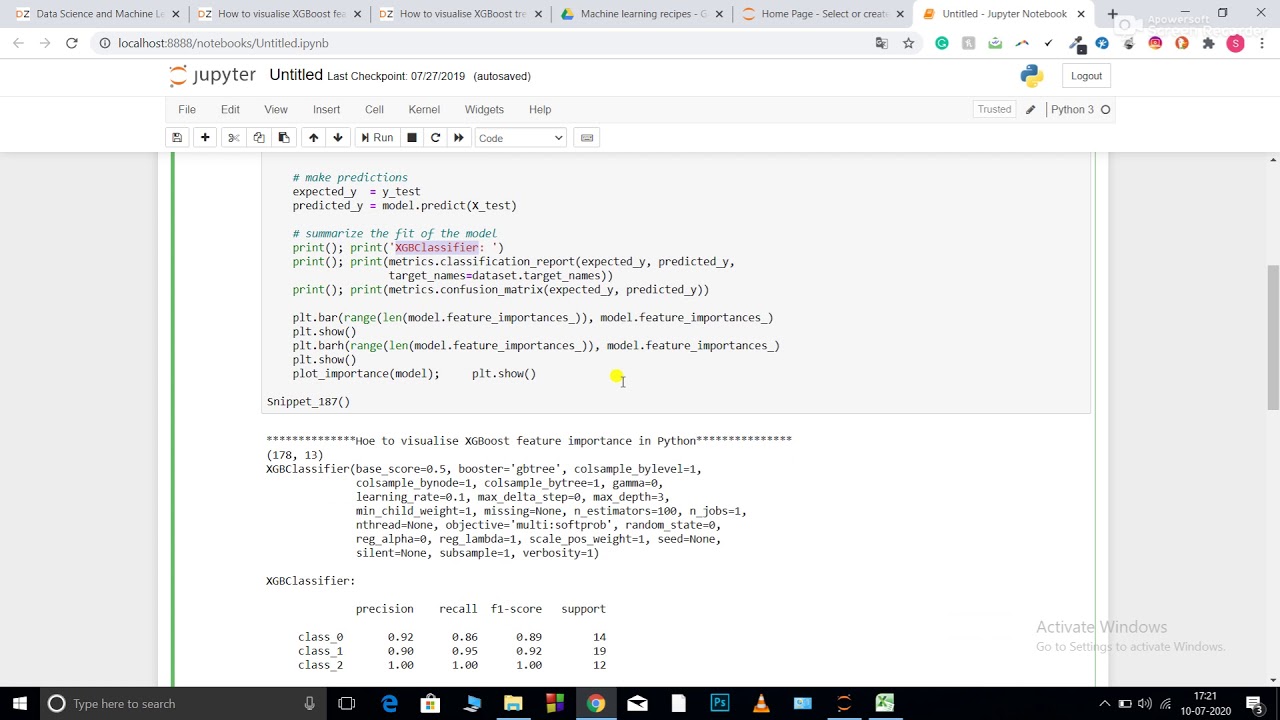

Visualize the feature importance of the XGBoost model in Python

Images related to the topicVisualize the feature importance of the XGBoost model in Python

What are the advantages and disadvantages of XGBoost?

- Advantages: Effective with large data sets. …

- Disadvantages: tree algorithms such as XGBoost and Random Forest can overfit the data, especially when the trees are deep and the data are noisy.


How do you explain XGBoost in interview?

XGBoost is essentially gradient boosting; the main difference lies in how each residual tree is built. XGBoost scores a candidate split by computing a similarity (structure) score for the parent node and for each child, using the gradients and Hessians of the loss. It keeps the split whose children improve most on the parent after regularization. This decides which variables are used at the root and at the other nodes.
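Here is a minimal sketch of the split score from the XGBoost paper. It assumes squared-error loss, so each sample's gradient is g = prediction - target and its Hessian is h = 1. λ (`reg_lambda`) and γ (`gamma`) are the regularization terms. A node's similarity score is G^2 / (H + λ), where G and H are the sums of g and h over the node's samples. A split's gain is half of (left score + right score - parent score), minus γ. Some tutorials drop the factor of one half. The sample values are made up.

```python
def similarity(grad_sum, hess_sum, reg_lambda):
    # Structure score of a node: G^2 / (H + lambda).
    return grad_sum ** 2 / (hess_sum + reg_lambda)

def split_gain(targets, prediction, left_mask, reg_lambda=1.0, gamma=0.0):
    # Squared-error loss 1/2 (y - p)^2: gradient g = p - y, Hessian h = 1.
    grads = [prediction - t for t in targets]
    g_left = sum(g for g, left in zip(grads, left_mask) if left)
    g_right = sum(g for g, left in zip(grads, left_mask) if not left)
    h_left = float(sum(left_mask))
    h_right = float(len(targets) - sum(left_mask))
    parent = similarity(g_left + g_right, h_left + h_right, reg_lambda)
    children = (similarity(g_left, h_left, reg_lambda)
                + similarity(g_right, h_right, reg_lambda))
    return 0.5 * (children - parent) - gamma

# Four samples currently predicted as 0.5; split the two small targets from the two large ones.
gain = split_gain([1.0, 2.0, 6.0, 7.0], 0.5, [True, True, False, False])
print(round(gain, 4))  # 5.0667
```

A larger `gamma` makes this split unprofitable: with `gamma=10.0` the gain becomes negative, and XGBoost would not keep the split.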

Is XGBoost faster than random forest?

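It depends on the settings. In many reasonable cases, XGBoost can be noticeably slower than a properly parallelized random forest, because boosting builds its trees one after another, while a forest's trees are independent and can be built in parallel. If you're new to machine learning, I would suggest understanding the basics of decision trees before you try to understand boosting or bagging.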

Can XGBoost handle continuous data?

Yes, it’s well-known that a tree(/forest) algorithm (xgboost/rpart/etc.) will generally ‘prefer’ continuous variables over binary categorical ones in its variable selection, since it can choose the continuous split-point wherever it wants to maximize the information gain (and can freely choose different split-points …

Can XGBoost be used for classification?

XGBoost (eXtreme Gradient Boosting) is a popular supervised-learning algorithm used for both regression and classification on large datasets. It builds shallow decision trees sequentially and uses a highly scalable training method with built-in regularization that helps limit overfitting.

Can XGBoost handle factors?

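With the default tree booster (`booster="gbtree"`), XGBoost accepts categorical variables that have been encoded as integers and treats the codes as ordinary numbers, so dummifying or one-hot encoding is not strictly required. The trees then split on the arbitrary order of the codes, however. Native categorical support (`enable_categorical=True` in recent releases) avoids that.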

Should I normalize data for gradient boosting?

Boosting trees is about building multiple decision trees. Decision trees don't require feature normalization because each split only compares a value with a threshold. What matters is the order of the values, not their scale.

Is XGBoost robust to outliers?

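Like other boosting methods that use squared-error loss, XGBoost can be sensitive to outliers in the target. A point with a large residual produces a large gradient, so later trees spend effort fitting it. Outliers in the input features matter less, because splits depend only on the ordering of values. A robust objective such as `reg:pseudohubererror` reduces the influence of target outliers.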
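This small numeric sketch shows why squared error reacts strongly to an outlier in the target. Under squared error the gradient equals the residual. Under the pseudo-Huber loss, the idea behind `reg:pseudohubererror`, the gradient is capped near `delta`. In XGBoost the corresponding parameter is `huber_slope`; `delta=1.0` here is an assumed value.

```python
import math

def squared_error_grad(pred, target):
    # Loss 1/2 (pred - target)^2 -> the gradient is the residual itself.
    return pred - target

def pseudo_huber_grad(pred, target, delta=1.0):
    # Loss delta^2 (sqrt(1 + (r/delta)^2) - 1) -> gradient r / sqrt(1 + (r/delta)^2).
    r = pred - target
    return r / math.sqrt(1.0 + (r / delta) ** 2)

for target in (1.0, 100.0):  # a typical point and an outlier
    print(target, squared_error_grad(0.0, target),
          round(pseudo_huber_grad(0.0, target), 4))
```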

Do you need to normalize data for gradient boosting?

No. It is not required.

Does feature importance add up to 1?

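In scikit-learn's tree models, the importances are normalized at the end: each feature importance is divided by the total sum of importances. So, in some sense, the feature importances of a single tree are percentages. They sum to one and describe how much each feature contributes to the tree's total impurity reduction. XGBoost's scikit-learn wrappers normalize `feature_importances_` in the same way, whereas `Booster.get_score()` returns raw, unnormalized scores.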


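How do you interpret feature importance?

One practical approach is permutation importance:

- Calculate the mean squared error with the original values.

- Shuffle the values of one feature and make predictions.

- Calculate the mean squared error with the shuffled values.

- Compare the difference between them: the larger the increase in error, the more the model relies on that feature. Repeat for each feature.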

What is F score in feature importance?

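The F-score used in filter-style feature selection measures the discriminative power of each feature independently of the others. One score is computed for the first feature and another for the second, but the scores say nothing about the combination of both features (for example, their mutual information). XGBoost's `plot_importance` also labels its axis "F score" by default. There, the value is simply the chosen importance measure, which by default is `weight`, the number of times the feature is used to split.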
